YES AND: Designing AI agents that take turns naturally

The Problem With Access to Diverse Expertise

Organisations face a persistent challenge: while diversity of thought leads to more creative problem-solving outcomes, individuals often lack access to varied expertise when they need it. Teams may be too small, experts too busy, or the right combination of perspectives simply unavailable. Current AI tools typically provide a single perspective with high confidence, functioning more as answer machines than thinking partners.

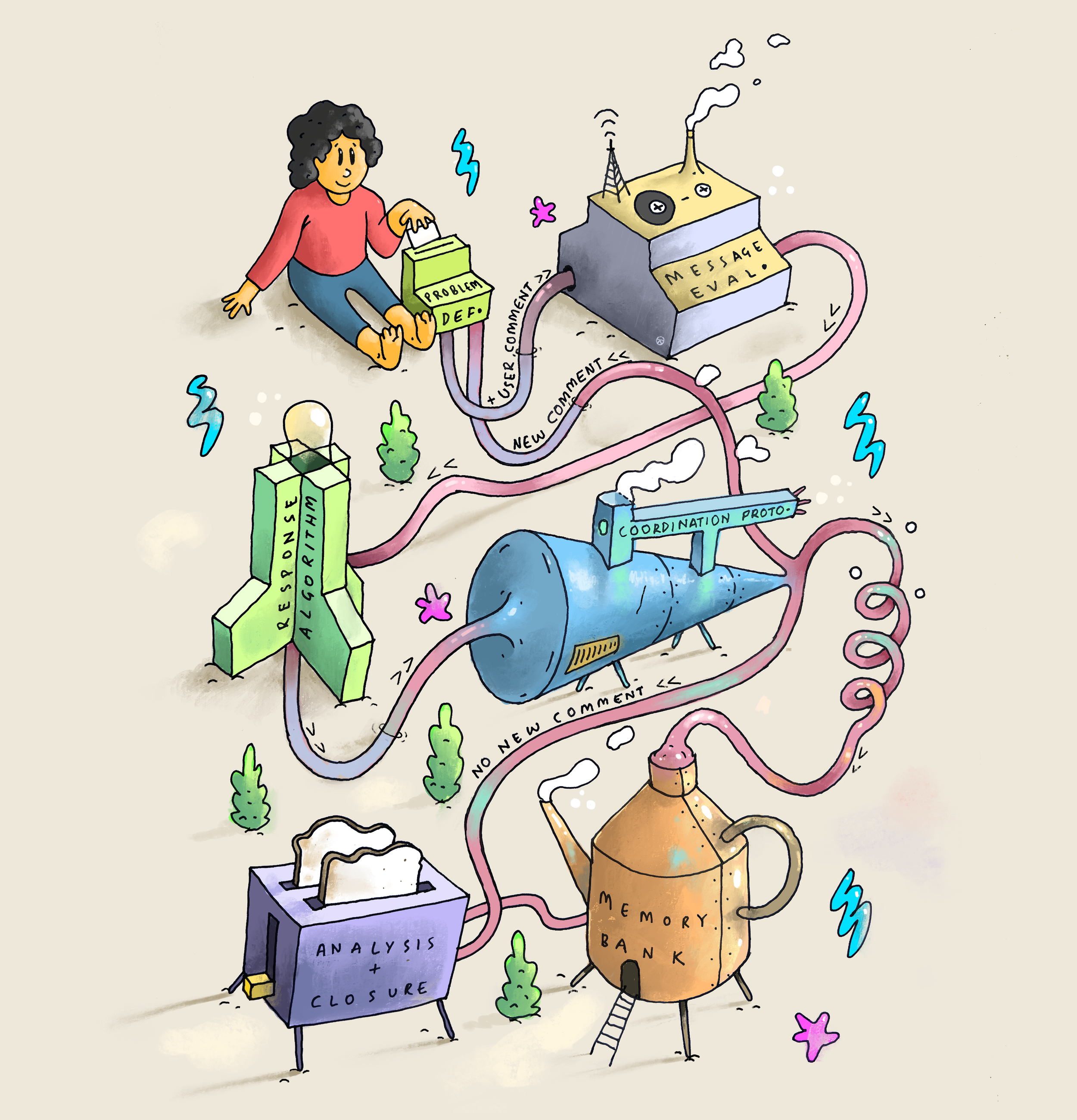

YES AND addresses this gap by creating a multi-agent framework where AI personas with distinct expertise engage in dynamic conversations with users, simulating the kind of diverse ideation that would normally require assembling a cross-functional team.

Research-Driven Problem Definition

The design process began with understanding why traditional ideation fails. The literature reveals several key issues:

Production blocking: individuals in groups can't contribute when others are speaking

Social loafing: reduced individual effort in group settings

Groupthink: pressure toward consensus that suppresses dissenting views

But existing AI systems exhibited their own failure modes. Systems like Solo Performance Prompting (SPP) and PersonaFlow demonstrated that multi-agent approaches could work, but they relied on rigid, pre-defined interaction rules that constrained organic idea development. The agents either followed scripted sequences or required explicit orchestration, limiting spontaneity and user agency.

The research phase identified a clear design goal: create a system that avoids the pitfalls of both human group dynamics and rigid AI interactions while maintaining user engagement in the creative process.

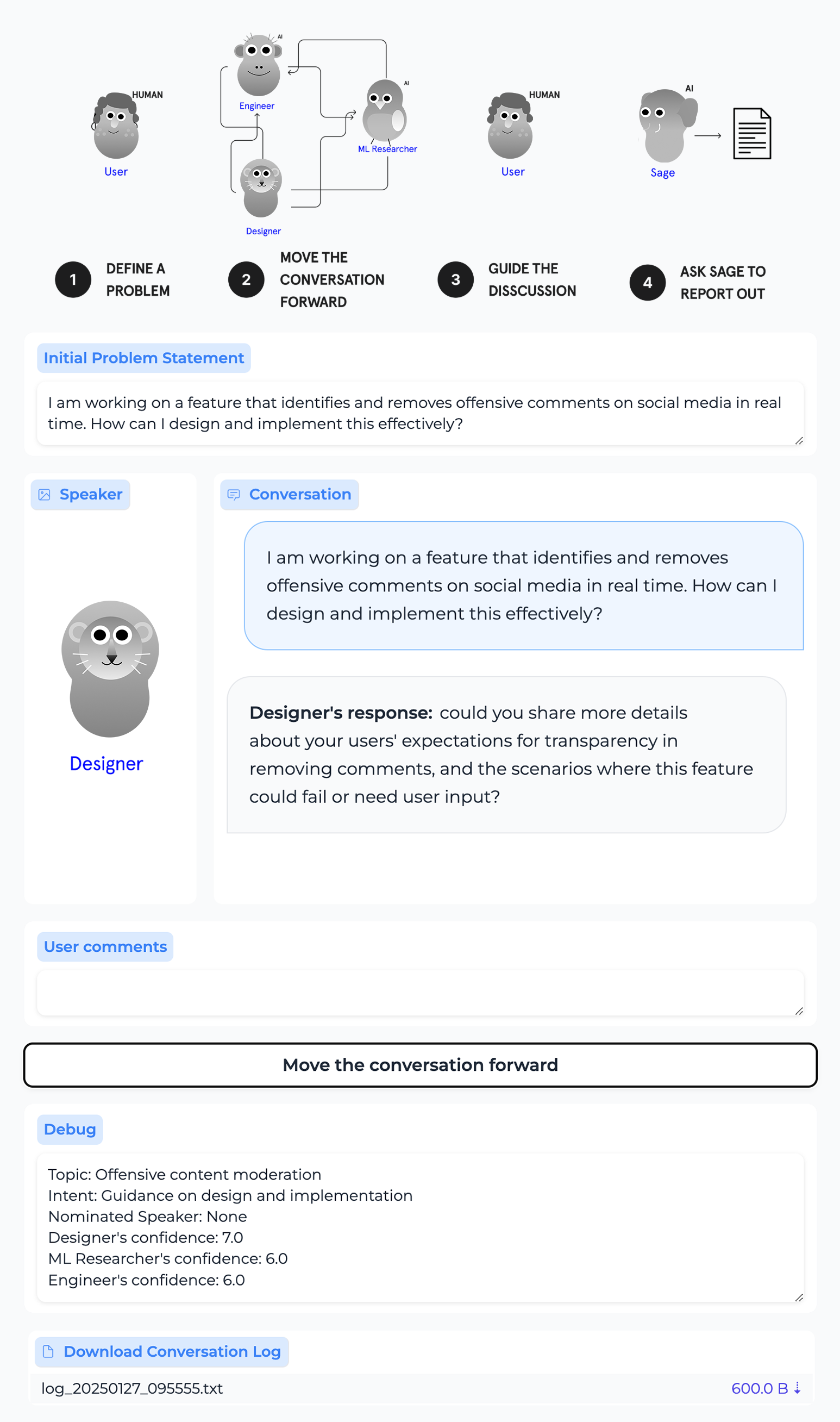

From Linear Sequences to Conversational Turn-Taking

V1: The Linear Approach

The initial prototype used a straightforward architecture: three persona-based agents (Designer, ML Researcher, Engineer) responded sequentially to user problems, followed by a summarizing agent (the "Sage"). Each agent received the full conversation history and was prompted to build on previous contributions within a 30-word constraint.
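A minimal sketch of the V1 flow follows. It assumes a generic `llm(system_prompt, history)` callable, which is hypothetical: any chat-model client could stand behind it. The prompt wording is illustrative. Only the fixed persona order, the shared full history, the 30-word limit, and the closing Sage summary come from the description above.

```python
from typing import Callable, List

# Hypothetical model interface: (system_prompt, history) -> reply text.
LLM = Callable[[str, List[str]], str]

PERSONAS = ["Designer", "ML Researcher", "Engineer"]
WORD_LIMIT = 30


def run_linear_round(problem: str, llm: LLM) -> List[str]:
    """V1 flow: each persona speaks once, in a fixed order, then the Sage summarizes."""
    history = [f"User: {problem}"]
    for persona in PERSONAS:
        system = (
            f"You are the {persona}. Build on the previous contributions. "
            f"Answer in at most {WORD_LIMIT} words."
        )
        # Every agent sees the full history accumulated so far.
        reply = llm(system, history)
        history.append(f"{persona}: {reply}")
    summary = llm("You are the Sage. Summarize the discussion above.", history)
    history.append(f"Sage: {summary}")
    return history
```

The loop has no branch in which an agent can ask a question or the user can step in before the Sage finishes. That is the structural root of the flaws described below.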

The technical implementation worked. Agents stayed in character, referenced each other's ideas, and produced coherent outputs. But the user experience revealed critical flaws:

No spontaneity or serendipitous idea development

Users couldn't interject or redirect the conversation mid-flow

Agents couldn't ask clarifying questions or challenge assumptions

The linear structure created a report-like output rather than a thinking process

V2: Implementing Conversation Analysis Principles

The redesign drew on Sacks, Schegloff, and Jefferson's conversation turn-taking model, which describes three mechanisms for speaker transitions:

Current speaker selects next speaker (nomination)

Next speaker self-selects (voluntary contribution)

Current speaker continues (extended turn)
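In Sacks, Schegloff, and Jefferson's model, these three mechanisms are not interchangeable. They apply in priority order. The sketch below encodes that ordering. The function and parameter names are hypothetical. "First to self-select" is approximated as the first entry in a list, because software agents have no natural notion of who starts talking first.

```python
from typing import List, Optional


def allocate_next_turn(current: str,
                       nominated: Optional[str],
                       self_selectors: List[str],
                       current_continues: bool) -> Optional[str]:
    """Apply the turn-allocation rules in priority order.

    1. If the current speaker nominated someone, that party speaks next.
    2. Otherwise, the first party to self-select takes the turn.
    3. Otherwise, the current speaker may continue.
    Returns None when nobody takes the turn.
    """
    if nominated is not None:
        return nominated
    if self_selectors:
        return self_selectors[0]  # stand-in for "whoever starts first"
    if current_continues:
        return current
    return None
```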

Translating this to a multi-agent system required solving a fundamental technical problem: LLMs exhibit a strong verbosity bias and tend to generate responses even when they have little meaningful to add. Without intervention, agents would produce tangential or overgeneralized content that derailed conversations.

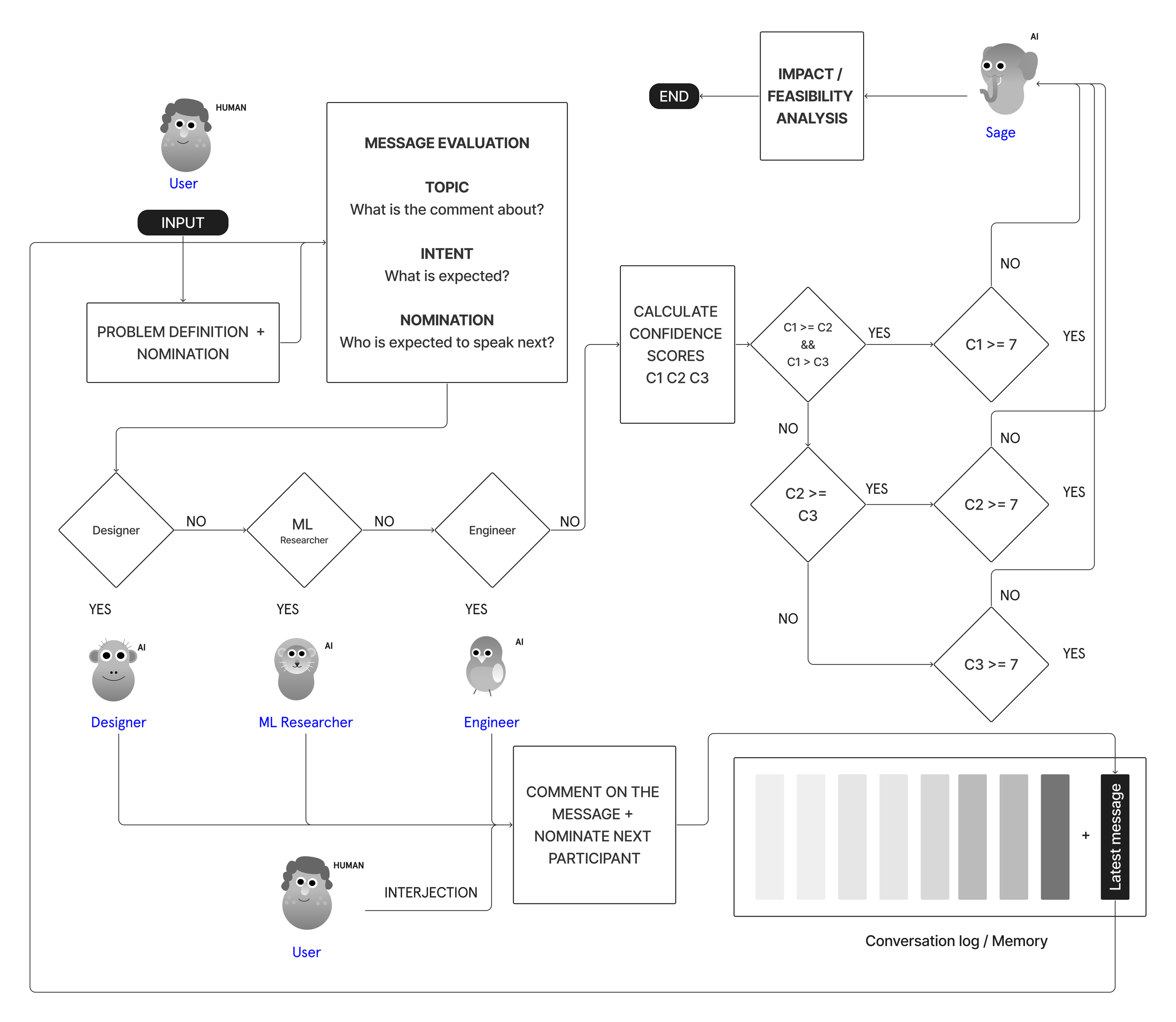

The Confidence Mechanism: Teaching Agents When to Speak

The core innovation is a confidence-based turn-taking system that gives agents autonomy over participation. Here's how it works:

Architecture:

Each agent receives the conversation history and latest message

A lightweight evaluation function prompts the agent to assess:

Topic: What is the message about?

Intent: What does the speaker want?

Confidence: On a 0-10 scale, how meaningfully can I contribute?

Agents only respond if confidence exceeds a threshold (set at 7)

If below threshold, agents output a minimal acknowledgment ("hmm")

The highest-confidence agent speaks; ties are resolved randomly
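The selection step can be sketched as follows, assuming each agent's self-evaluation has already been parsed into a record. The threshold of 7 and the random tie-break come from the list above. The `Evaluation` type and `select_speaker` name are hypothetical. The text says confidence must *exceed* 7, so this sketch treats a score of exactly 7 as below the bar; that reading is an assumption.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

THRESHOLD = 7  # from the text; strict ">" (score must exceed 7) is an assumption


@dataclass
class Evaluation:
    agent: str
    topic: str       # "What is the message about?"
    intent: str      # "What does the speaker want?"
    confidence: int  # "How meaningfully can I contribute?" (0-10)


def select_speaker(evals: List[Evaluation], rng: random.Random) -> Optional[str]:
    """Return the agent who takes the next turn, or None if no one clears the threshold.

    Agents below the threshold would emit only a minimal acknowledgment ("hmm").
    """
    eligible = [e for e in evals if e.confidence > THRESHOLD]
    if not eligible:
        return None
    best = max(e.confidence for e in eligible)
    tied = [e.agent for e in eligible if e.confidence == best]
    return rng.choice(tied)  # ties are broken at random
```

Passing in a seeded `random.Random` keeps tie-breaking reproducible in tests. Returning `None` lets the caller hand the floor back to the user when every agent passes; that policy is an assumption of this sketch.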

System Architecture

Prompt Engineering Details:

Each agent's system message defines their expertise domain and approach to problem-solving. For example, the Designer agent's prompt emphasizes user-centered methodologies and research-driven insights, while the Engineer agent focuses on implementation feasibility and technical constraints.

The confidence mechanism required iterative refinement:

Initial prompts without word limits produced verbose, unfocused responses (500+ words)

Adding strict word limits (30-50 words) forced conciseness but initially reduced technical depth

The final prompts balance word constraints with explicit instructions to "be technical and thorough" or "ask clarification questions when needed"
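A sketch of how such a persona prompt might be assembled is shown below. The two quoted instructions and the Designer and Engineer focus areas come from the text. The remaining wording, the `build_system_prompt` name, and the 50-word default (the upper end of the 30-50 range) are assumptions. The ML Researcher is omitted because its focus is not described here.

```python
# Focus areas as described for two of the personas; wording is illustrative.
PERSONA_FOCUS = {
    "Designer": "user-centered methods and research-driven insights",
    "Engineer": "implementation feasibility and technical constraints",
}


def build_system_prompt(persona: str, max_words: int = 50) -> str:
    """Combine a persona's domain with a word budget and depth-preserving instructions."""
    focus = PERSONA_FOCUS[persona]  # raises KeyError for personas not listed above
    return "\n".join([
        f"You are the {persona} in a group ideation session.",
        f"Approach every problem through {focus}.",
        f"Reply in at most {max_words} words.",
        # Counterweights to the word limit, quoted from the text:
        "Be technical and thorough.",
        "Ask clarification questions when needed.",
    ])
```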

Example behavior:

User: "The button placement follows Fitts' Law."

Without confidence mechanism:

Agent: "Yes, Fitts' Law states that the time to acquire a target is a function of the distance to and size of the target. By placing buttons closer to the expected interaction area or making them larger, you improve usability and reduce interaction time..." [continues]

With confidence mechanism:

Agent: "Hmm."

Topic: Usability principle. Intent: Stating a design observation.

Confidence score: 4

The agent recognizes the statement as factually correct but judges that it cannot add meaningful value, so it yields the floor with only a minimal acknowledgment.
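The structured self-evaluation in this example lends itself to simple parsing. The sketch below assumes the model replies in the "Topic: ... Intent: ... Confidence score: N" layout shown above. Several details are illustrative assumptions, not the project's documented behavior:

- the regular expression
- the clamping to the 0-10 scale
- treating unparseable output as zero confidence
- the `parse_evaluation` and `should_speak` names

```python
import re
from typing import NamedTuple


class ParsedEvaluation(NamedTuple):
    topic: str
    intent: str
    confidence: int


# Assumes the "Topic: ... Intent: ... Confidence score: N" layout.
_EVAL_RE = re.compile(
    r"Topic:\s*(?P<topic>.*?)\s*Intent:\s*(?P<intent>.*?)\s*Confidence score:\s*(?P<score>\d+)",
    re.DOTALL,
)


def parse_evaluation(raw: str) -> ParsedEvaluation:
    match = _EVAL_RE.search(raw)
    if match is None:
        # Unparseable output counts as "nothing to add", so it can never take the floor.
        return ParsedEvaluation("", "", 0)
    score = min(10, int(match["score"]))  # clamp to the 0-10 scale (\d+ is never negative)
    return ParsedEvaluation(match["topic"].rstrip("."), match["intent"].rstrip("."), score)


def should_speak(evaluation: ParsedEvaluation, threshold: int = 7) -> bool:
    """True only when the agent's confidence exceeds the threshold."""
    return evaluation.confidence > threshold
```

Applied to the Fitts' Law example, the parsed score of 4 falls below the threshold. In that case `should_speak` returns `False` and the agent answers only "Hmm."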

Technical Implementation and Conversation Dynamics

Memory and Context Management

The system maintains a shared conversation log with timestamps and speaker identifiers. Each message is appended to this log, which serves as the context window for all subsequent agent responses. While this approach has limitations for very long conversations (context window constraints become relevant), it proved sufficient for typical ideation sessions (10-20 exchanges).
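The shared log can be sketched as an append-only list of records. All class and field names are hypothetical. The metadata fields mirror the topic, intent, nominated-speaker, and confidence information recorded with each message. Whether that metadata also reaches the agents' context is not stated, so this sketch renders only the timestamp, speaker, and message.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class LogEntry:
    speaker: str
    content: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    # Turn-taking metadata (hypothetical field names); not rendered into context here.
    topic: Optional[str] = None
    intent: Optional[str] = None
    nominated: Optional[str] = None
    confidence: Dict[str, int] = field(default_factory=dict)

    def render(self) -> str:
        return f"[{self.timestamp:%H:%M:%S}] {self.speaker}: {self.content}"


class ConversationLog:
    """Append-only log shared by every agent; its rendering is each agent's context."""

    def __init__(self) -> None:
        self.entries: List[LogEntry] = []

    def append(self, entry: LogEntry) -> None:
        self.entries.append(entry)

    def as_context(self) -> str:
        # The whole log is passed on every turn, so prompt size grows with the session.
        return "\n".join(entry.render() for entry in self.entries)
```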

The conversation log structure:

[Timestamp] Speaker: Message content

[Topic] [Intent] [Nominated Speaker] [Confidence Scores]

User Agency and Conversation Control

The architecture preserves user agency through three mechanisms:

Direct nomination: Users can call on specific agents by name

Interjection: Users can interrupt agent-to-agent dialogue at any point

Early termination: Users can summon the Sage for summary when they have sufficient material

This design prevents the common failure mode where agent-to-agent conversations extend beyond usefulness or drift from the original problem.
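The user controls can be sketched as a small router that runs before any agent evaluates a user message. The agent names come from the text. The matching rule is a simplifying assumption: any mention of an agent's name counts as a nomination, and any mention of the Sage counts as a summary request. `route_user_message` is a hypothetical name. Interjection is assumed to be handled by the surrounding loop, which would check for a pending user message before each agent turn.

```python
import re
from typing import Optional, Tuple

AGENTS = ["Designer", "ML Researcher", "Engineer"]
SAGE = "Sage"


def _mentions(name: str, message: str) -> bool:
    """Case-insensitive whole-word match, so 'message' does not match 'Sage'."""
    return re.search(rf"\b{re.escape(name)}\b", message, re.IGNORECASE) is not None


def route_user_message(message: str) -> Tuple[str, Optional[str]]:
    if _mentions(SAGE, message):
        return ("summarize", SAGE)       # early termination
    for agent in AGENTS:                 # if several agents are named, list order wins
        if _mentions(agent, message):
            return ("nominate", agent)   # direct nomination
    return ("open", None)                # agents self-select via confidence
```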

Prompt Engineering for Conversational Behavior

Beyond confidence scoring, the prompts encourage specific conversational behaviors:

Asking clarification questions rather than making assumptions

Nominating other agents when their expertise becomes relevant

Building on or challenging previous contributions

Staying focused on the user's original problem definition

The Sage agent uses a distinct prompt structure that emphasizes synthesis rather than addition. It's instructed to create "a seed of a holistic approach" rather than a complete solution, reinforcing the philosophy that the user should develop the final solution themselves.
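Below is a sketch of the Sage request. It assumes the same chat-style interface as the personas: a system prompt plus a user message. From the text come the emphasis on synthesis over addition and the quoted phrase "a seed of a holistic approach". The remaining wording and the `build_sage_request` name are hypothetical.

```python
from typing import List, Tuple


def build_sage_request(log_lines: List[str]) -> Tuple[str, str]:
    """Return (system_prompt, user_message) for the summarizing Sage agent."""
    system_prompt = (
        "You are the Sage. Synthesize the ideas already on the table rather than "
        "adding new ones. Offer a seed of a holistic approach, not a complete "
        "solution, so the user can develop the final solution themselves."
    )
    user_message = "Discussion so far:\n" + "\n".join(log_lines)
    return system_prompt, user_message
```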

Design Principles That Emerged

1. Constraints Enable Creativity

Limiting response length and requiring confidence self-assessment prevented the verbose, meandering outputs typical of LLM conversations. Agents became more precise and purposeful in their contributions.

2. Autonomy Creates Natural Flow

Allowing agents to self-select based on confidence produced more organic conversation dynamics than rigid turn-taking rules. The Designer naturally dominated early user experience questions, while the Engineer became more active during technical feasibility discussions.

3. User-Centered AI Means Preserving Agency

The most critical design decision was keeping the user central to the ideation process. The agents augment thinking rather than replace it. This addresses concerns about cognitive offloading and skill atrophy that can result from over-reliance on AI assistance.

Limitations and Future Directions

The current system has several constraints worth noting:

Predefined personas: The three expert roles may not align with every problem domain. Dynamic persona generation based on problem analysis could improve relevance.

Context window management: As conversations extend, maintaining relevance across long histories becomes challenging. More sophisticated memory architectures or attention mechanisms could help.

Single-user limitation: The system currently supports one user with multiple agents. Multi-user scenarios with collaborative ideation remain unexplored.

Evaluation: The framework has not yet undergone formal user testing. Assessing its impact on creativity, engagement, and ideation quality is an essential next step.

Technical Implications for AI System Design

This project demonstrates that conversation dynamics matter for AI systems. Rather than optimizing only for answer quality or task completion, designing for natural turn-taking, appropriate participation, and user agency creates more effective thinking tools.

The confidence mechanism, while simple, suggests a broader design pattern: AI systems should be able to assess not just what they know, but whether their contribution is contextually valuable. This moves beyond factual accuracy toward conversational appropriateness.

This project was conducted at Microsoft Research and will be presented at CHI 2025 in Yokohama, Japan.